As many SEOs and web developers will be aware, the Google Search Console Fetch and Render tool is great for identifying render-blocking elements on a page. It gives you an instant snapshot of the code and the rendered page that Googlebot sees when it crawls a specified URL on a given website.

What is the Fetch as Google Tool?

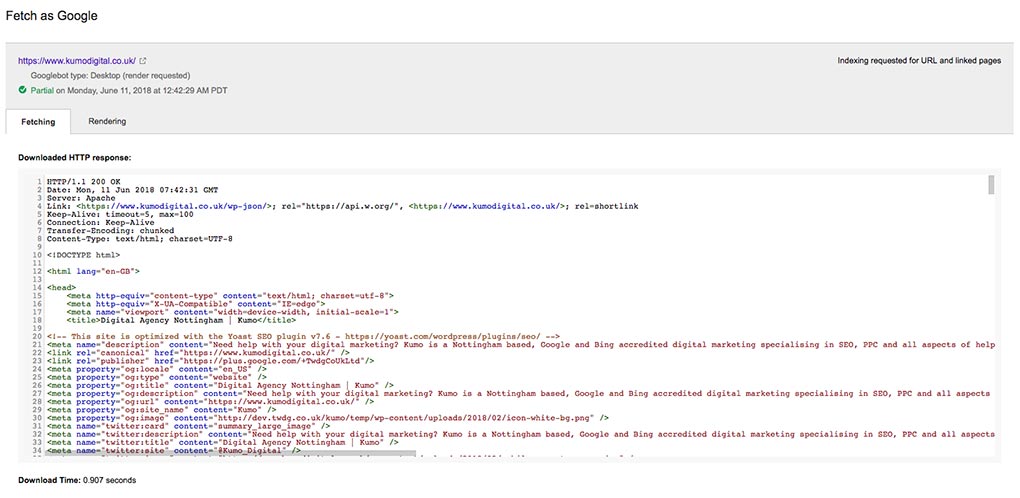

The ‘Fetch as Google’ tool in Search Console asks either the desktop or the mobile version of Googlebot (whichever you specify) to fetch the URL you enter. It then returns a short report on the page, split into two parts: ‘fetching’ and ‘rendering’.

The ‘fetching’ report returns the page’s HTTP response code, the downloaded HTTP response (including the page’s markup) and the download time.

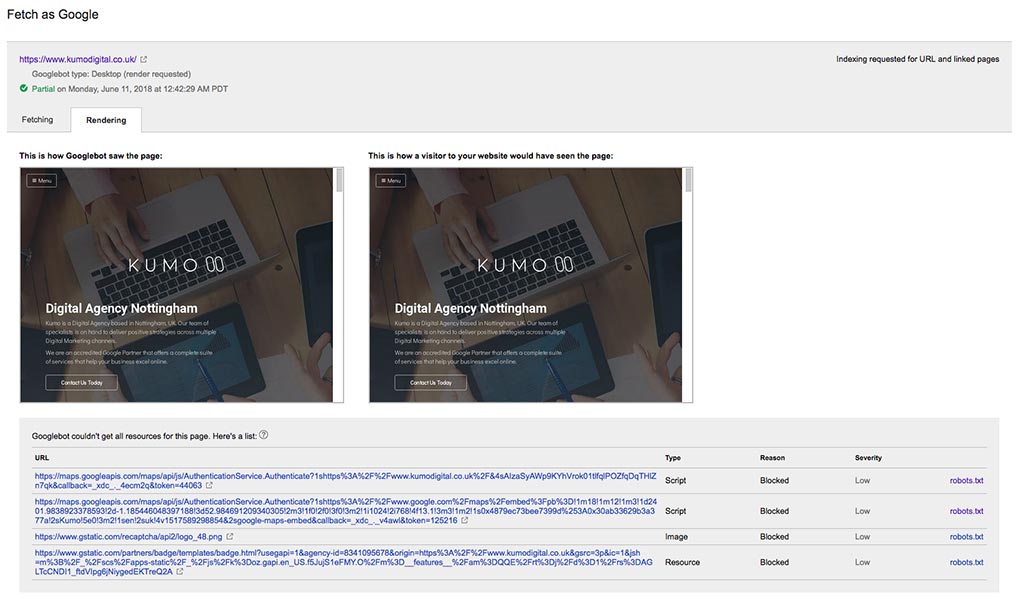

The ‘rendering’ report is more visual. Two iframes show the rendered page side by side: “how Googlebot saw the page” and “how a visitor to your website would have seen the page”. Below them, a table lists the resources Googlebot could not retrieve, under the heading “Googlebot couldn’t get all resources for this page. Here’s a list:”.

Pay careful attention to the resources listed in that table in order to “get optimal indexing of your content: make sure Googlebot can access any embedded resource that meaningfully contributes to your site’s visible content, or to its layout”.
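One possible reason a resource ends up in that table is that the site’s robots.txt disallows it for Googlebot. As a quick first check before fetching again, here is a minimal sketch that uses Python’s standard-library `urllib.robotparser` to test each embedded CSS or JavaScript URL against a robots.txt file. The function name `blocked_for_googlebot`, the robots.txt rules and the asset URLs are all hypothetical examples. Note that this parser uses simple path-prefix matching and does not implement Google’s `*` and `$` wildcard extensions, so treat its answer as indicative rather than definitive.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt: str, resource_urls: List[str]) -> List[str]:
    """Return the resource URLs that the given robots.txt disallows for Googlebot.

    Assumption: the standard-library parser is close enough for a first pass.
    It matches paths by prefix only and ignores Google's '*' / '$' wildcards.
    """
    parser = RobotFileParser()
    # parse() takes the robots.txt content as a list of lines (no network access)
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch("Googlebot", url)]


if __name__ == "__main__":
    # Hypothetical robots.txt and asset URLs, for illustration only
    robots = """\
User-agent: *
Disallow: /wp-includes/
Disallow: /assets/js/
"""
    assets = [
        "https://www.example.com/assets/css/site.css",
        "https://www.example.com/assets/js/menu.js",
        "https://www.example.com/wp-includes/js/jquery/jquery.js",
    ]
    for url in blocked_for_googlebot(robots, assets):
        print("Blocked for Googlebot:", url)
```

Any URL this flags is worth comparing against the resource table in the rendering report; if a blocked file affects layout or visible content, it is a candidate for unblocking.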

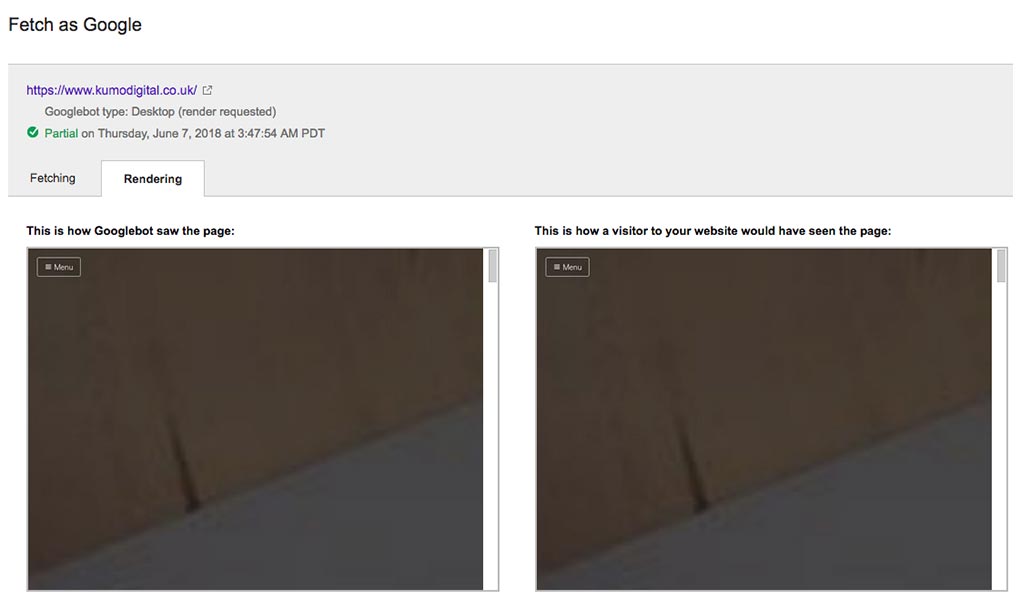

Pay the same attention to the visual renders of the page. Comparing how a visitor sees the page with how Googlebot sees it (especially the Googlebot render) is essential for spotting any issues Googlebot has rendering your page. This tool is how webmasters identified the CSS viewport height (vh) bug, along with other elements that can block parts of a page from being rendered.

You may be wondering why a Googlebot render issue matters when the site renders as intended in a visitor’s browser. From an SEO perspective, it would be a serious hindrance if Googlebot’s renderer could not render, crawl and read all of a page’s content.

What is the Bug with CSS Viewport Height (vh) Units?

A bug within the Search Console visual render tool has been identified by a handful of webmasters on Google Product Forum threads and a StackOverflow question:

https://productforums.google.com/forum/#!topic/webmasters/jXqRcnWN8Ng

https://productforums.google.com/forum/#!topic/webmasters/8ZuoXjg-Cf4

The bug occurs when an element’s height property is set in CSS viewport height (vh) units. The element with the vh value expands to fill the renderer’s entire viewport height.

It is speculated that the renderer loads the page in a 1024×768 viewport, measures the page’s total scrollable height (its scrollHeight), then resizes its virtual browser to that height. Because the vh element is sized relative to the viewport, it grows with the resize and pushes everything after it further down. The screenshot taken at that point, which is the one displayed in the Search Console report, shows the first element with a vh value filling the remainder of the page, and none of the elements that follow it in the page’s markup.
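To make the speculated mechanism concrete, here is a toy model in Python. It is a sketch under assumptions, not Google’s renderer: the page is reduced to a hypothetical vertical stack of blocks (`Block`, `page_height` and `visible_in_screenshot` are illustrative names), only the 768px viewport height matters, and the renderer is assumed to resize exactly once, as described above. For comparison, it also models the same page with a pixel max-height on the vh element.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Block:
    """A hypothetical page element, stacked vertically in normal document flow."""
    name: str
    px: float = 0.0                 # fixed height in pixels (used when vh is None)
    vh: Optional[float] = None      # height in vh units, e.g. 100 for "height: 100vh"
    max_px: Optional[float] = None  # optional "max-height" in pixels

    def height(self, viewport_h: float) -> float:
        # 1vh is 1% of the viewport height; max-height caps the result
        h = self.px if self.vh is None else self.vh * viewport_h / 100
        return h if self.max_px is None else min(h, self.max_px)


def page_height(blocks: List[Block], viewport_h: float) -> float:
    """Total scrollable height when the blocks are stacked one after another."""
    return sum(b.height(viewport_h) for b in blocks)


def visible_in_screenshot(blocks: List[Block], initial_h: float = 768) -> List[str]:
    """Names of blocks whose top edge falls inside the simulated screenshot.

    Assumed sequence: lay out at a 768px-tall viewport, measure the page
    height, resize the viewport to that height, lay out again, then capture.
    """
    capture_h = page_height(blocks, initial_h)  # measured before the resize
    top = 0.0
    visible = []
    for b in blocks:  # second layout pass, at the resized viewport height
        if top < capture_h:
            visible.append(b.name)
        top += b.height(capture_h)
    return visible


if __name__ == "__main__":
    page = [Block("header", px=100), Block("hero", vh=100), Block("content", px=1500)]
    print(visible_in_screenshot(page))  # ['header', 'hero']

    capped = [Block("header", px=100), Block("hero", vh=100, max_px=1080),
              Block("content", px=1500)]
    print(visible_in_screenshot(capped))  # ['header', 'hero', 'content']
```

With the uncapped hero, the first pass measures the page at 2,368px; after the resize the hero is itself 2,368px tall, so the content block starts below the bottom of the capture. With a 1080px cap, the hero stops growing and the content starts inside the screenshot, which is the idea behind the max-height fix.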

In the first Google Product Forum thread listed above, the bug appears to have stopped Googlebot from crawling all of the page’s content, causing the page to perform poorly in the SERPs.

The user Lukeg4 reports that after implementing a fix for the vh bug, he saw a huge difference in rankings:

“It made a huge difference.

The webpage has gone from being rated like junk to being rated far, far better. It’s still not where I’d like it to be, but that’s another issue for another time.

Anyway, after the changes, the SERP location of the page has improved massively – over 300 positions – and levelled out, where before its performance was extremely poor and highly erratic.”

His results appear to support the view that “Google penalises any content that is not seen in its render”, so fixing this bug on any site that encounters it is a must.

The Fix

The fix that we, along with others who have encountered this issue, have found is to add a CSS max-height value in pixels to any element whose height is specified in vh. This should stop the element from filling the entire window of Googlebot’s visual renderer and blocking the elements below it from being rendered.

An example element with both CSS height and max-height values is shown below.

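```css
.my-element {
  /* Fill the visitor's viewport height */
  height: 100vh;
  /* Cap the height in px so a stretched renderer viewport cannot grow it without limit */
  max-height: 1080px;
}
```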

You may wish to choose a max-height value in px that suits each element; the exact value in the example above is not critical to the fix. It does, however, need to be high enough that Googlebot renders the element tall enough to show all of the content it contains.
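Because the bug only affects elements whose height is set in vh, it can help to audit your stylesheets for rules that use a vh height without a px max-height. The following is a rough sketch in Python under stated assumptions: it is a regex-based scan, not a real CSS parser, and it only looks inside a single rule block, so a max-height declared in a different rule or applied through the cascade will not be seen. The function name `vh_heights_without_cap` is hypothetical.

```python
import re
from typing import List

# Matches innermost "selector { declarations }" blocks, so rules nested
# inside @media are still found.
RULE_RE = re.compile(r"([^{}]+)\{([^{}]*)\}")
# A number immediately followed by the "vh" unit, e.g. 100vh or 50.5vh
VH_RE = re.compile(r"\d*\.?\d+vh\b", re.IGNORECASE)


def vh_heights_without_cap(css: str) -> List[str]:
    """Return selectors whose rule sets `height` in vh but declares no `max-height`."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    flagged = []
    for selector, body in RULE_RE.findall(css):
        props = {}
        for decl in body.split(";"):
            if ":" in decl:
                name, value = decl.split(":", 1)
                props[name.strip().lower()] = value.strip()
        if VH_RE.search(props.get("height", "")) and "max-height" not in props:
            # Keep only the text after any preceding statement (e.g. @import ...;)
            flagged.append(" ".join(selector.split(";")[-1].split()))
    return flagged


if __name__ == "__main__":
    sample = """
    .hero { height: 100vh; }
    .capped { height: 100vh; max-height: 1080px; }
    """
    print(vh_heights_without_cap(sample))
```

Each flagged selector is only a candidate: whether it needs a cap depends on how the element actually renders in the fetch and render report.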

Testing Page Renders in Screaming Frog SEO Spider

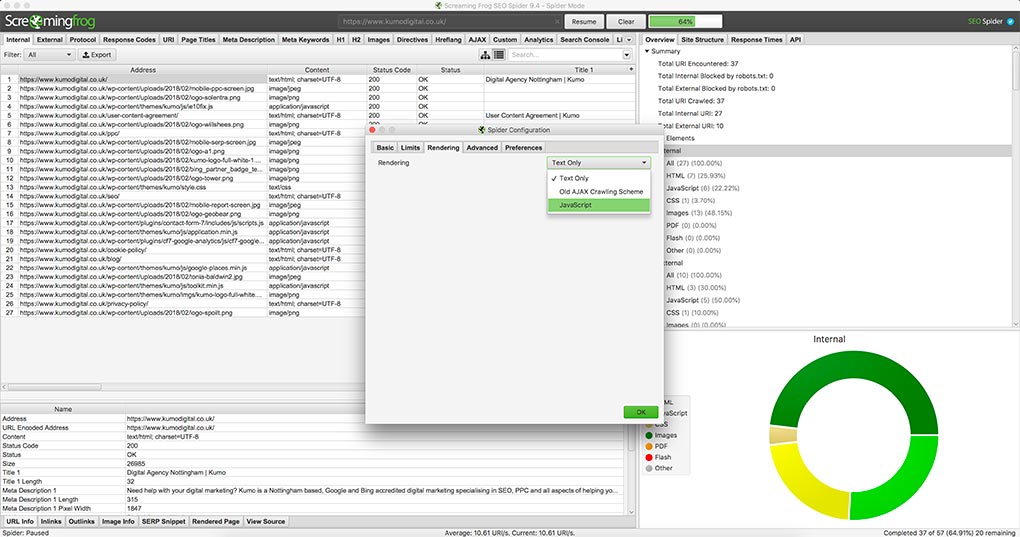

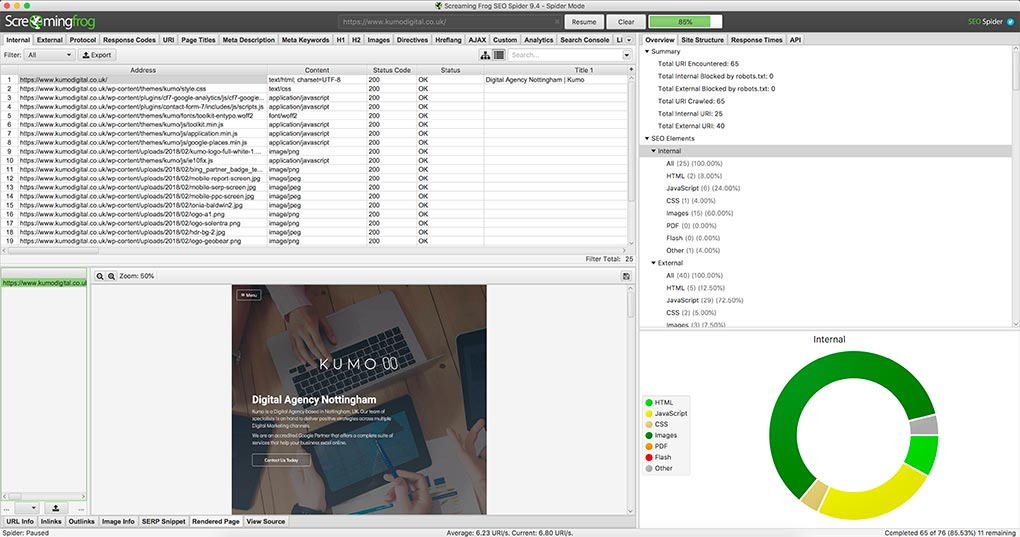

Rather than repeatedly waiting for Google Search Console to fetch and render your URL each time you test a fix, you can use the rendering feature in the Screaming Frog SEO Spider.

The feature is described in Screaming Frog’s user guide: https://www.screamingfrog.co.uk/seo-spider/user-guide/tabs/#rendered-page

To use this feature, you first need to switch the spider to JavaScript rendering. This mode is enabled from the main menu: Configuration > Spider > Rendering tab > Rendering drop-down > JavaScript.

There are also configuration options for setting the window size, including presets for Googlebot Desktop and Googlebot Mobile: Smartphone, so you can mimic the renderer window sizes used in Google Search Console.

Once that is enabled, enter a URL and start the crawl. Then select the URL in the list and make sure the ‘Rendered Page’ tab at the bottom of the Screaming Frog window is selected.

Author Biography

Mathew

A 14-year industry veteran who specialises in a wide array of online marketing areas, such as PPC, SEO, front-end web development, and WordPress and Magento development.

Accredited Google Partner and Bing Ads qualified, with a BA (Hons) in Digital Marketing. One half of the director duo at Kumo.